"But it is a pipe."

"No, it's not," I said. "It's a drawing of a pipe. Get it? All representations of a thing are inherently abstract. It's very clever."

Recently I have had an opportunity to learn some POSIX-ish stuff at my university. It is fantastic to discover how things work at the level of the operating system, the lowest level a casual programmer usually explores in any detail. If you have read my previous articles, you may have noticed that I often use multithreading. That is one of the things I have learned recently. Another such subject is communication between processes with pipes and FIFOs.

In this article, I will give you a simple explanation of how these means of communication work, and at the end, there will be some curious examples of confusing errors we can run into when using pipes and FIFOs.

Pipes

A pipe is a unidirectional data channel that can be used for interprocess communication. I think this particular name perfectly represents what it looks like from a programmer’s point of view, and in a moment you will see why.

Real-world pipes have two ends. You can put something like water into one end and get it out of the other one, which sits elsewhere. The flow usually goes in one direction only, because we would make a mess if we tried to pump water from both sides at the same time.

POSIX pipes work similarly. We create one by calling the pipe function (you will see the details later). This gives us two file descriptors, which are the ends of our pipe. Now it is enough to put one end in one process and the other end in another process. Then we can use them to write and read any data we want.
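To get a feel for the two ends before any second process is involved, here is a minimal sketch for a POSIX system that pushes a message in at one end and reads it out at the other, all within one process. The function name pipe_echo is made up for this illustration, and it assumes the message is small enough to fit into the pipe buffer, because nobody reads while we write.

```c
#include <string.h>
#include <unistd.h>

/* Sends msg through a fresh pipe and reads it back in the same process.
   Returns the number of bytes read, or -1 on error.
   pipe_echo is a hypothetical helper, not a standard function. */
ssize_t pipe_echo(const char *msg, char *out, size_t cap)
{
    int pipefd[2];
    if (pipe(pipefd) == -1)
        return -1;

    int piper = pipefd[0];  /* read end */
    int pipew = pipefd[1];  /* write end */

    size_t len = strlen(msg);
    if (write(pipew, msg, len) != (ssize_t)len) {
        close(piper);
        close(pipew);
        return -1;
    }
    close(pipew);  /* no writers left: after the data, read() would see end-of-file */

    ssize_t n = read(piper, out, cap);
    close(piper);
    return n;
}
```

Data only flows from pipew to piper; writing to piper or reading from pipew fails, which is exactly the unidirectional behaviour described above.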

But there are some constraints.

Descriptors

The first is that we have only descriptors. Let’s recall that descriptors are just integers that identify files in the table of open files of a particular process. It means that the descriptor “4” in one process can refer to something completely different from the descriptor “4” in another process. Does that make it impossible to put a pipe between two processes? Not exactly, and the exception is the way we actually use pipes.

Let’s recall another useful thing. The table of open files and their descriptors is copied when a process forks. In other words, just after forking, the parent process and the child have exactly the same descriptors referring to the same files. So we can create a pipe in the parent process, fork, and then use one end in the parent code and the other in the child. That is basically how it works!

Such pipes are also called unnamed pipes. The only way to get connected by an unnamed pipe is to create it and share a descriptor, which can be done by forking or by passing the descriptor over a (UNIX domain) socket. Later in this article, I will talk about named pipes, which do not have this restriction.

Buffer size

Like in real life, a pipe has a limited capacity. You cannot keep putting something in without taking anything out. Every pipe has its own buffer, which is managed by the operating system. If we want to send something new while the pipe is full, we need to wait until some process reads from the pipe and there is enough space for our new message.

There is also a small problem with sending relatively big chunks of data. A single write of at most PIPE_BUF bytes (a system limit, more about it later) is guaranteed to be atomic: no other writer’s data can get in between. A larger write may be transferred in several parts, and a sequence of separate writes is not atomic either. Why is that a problem?

The problem is concurrency. Assume we have multiple processes writing to the same pipe at the same time, and one of them has a large message to send, far greater than that limit. The message may be written to the pipe in several parts, and writing all of them is not atomic. It means that another process can write its own data between two consecutive parts. This leads to data integrity errors, as we get some rubbish inside our large message.

Data length

A POSIX pipe stores pure bytes, just as a real-world pipe holds water with no boundaries in it. You do not actually know how much water is inside (or how dirty it is) until you open a valve. POSIX pipes work similarly: you need to try reading the contained data to get any information about it. In particular, you basically do not know how many bytes are inside or where one message ends and another starts; the content is a pure stream of bytes.

There are some simple solutions to this problem. We can use messages of a fixed size, so we always know how many bytes to read to get a whole message. Another solution is to precede every message with a byte that tells how long the message is. We can include more metadata as well; however, we should still keep in mind the concurrency problem and the maximum atomic size of a message.
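Here is a minimal sketch of the length-prefix idea on a POSIX system, assuming messages of at most 255 bytes so that the length fits into one byte. The frame layout and the names send_msg, recv_msg and read_full are all hypothetical. The header and the payload go out in a single write, so a frame is never larger than 256 bytes, which is below the 512-byte minimum POSIX guarantees for PIPE_BUF, and it stays atomic even with several writers.

```c
#include <errno.h>
#include <string.h>
#include <unistd.h>

/* Reads exactly len bytes unless end-of-file comes first.
   Returns the number of bytes read, or -1 on error. */
static ssize_t read_full(int fd, void *buf, size_t len)
{
    size_t got = 0;
    while (got < len) {
        ssize_t n = read(fd, (char *)buf + got, len - got);
        if (n == -1) {
            if (errno == EINTR)
                continue;  /* interrupted by a signal, try again */
            return -1;
        }
        if (n == 0)
            break;  /* end-of-file */
        got += (size_t)n;
    }
    return (ssize_t)got;
}

/* Hypothetical frame: 1 length byte followed by the payload. */
int send_msg(int fd, const void *data, size_t len)
{
    unsigned char frame[1 + 255];
    if (len > 255) {
        errno = EMSGSIZE;
        return -1;
    }
    frame[0] = (unsigned char)len;
    memcpy(frame + 1, data, len);
    /* One write call, so the whole frame arrives in one piece. */
    return write(fd, frame, len + 1) == (ssize_t)(len + 1) ? 0 : -1;
}

/* Returns 1 and stores the payload length in *len_out when a message
   arrived, 0 at a clean end-of-file, -1 on error or a truncated frame.
   buf must have room for 255 bytes. */
int recv_msg(int fd, void *buf, size_t *len_out)
{
    unsigned char len;
    ssize_t n = read_full(fd, &len, 1);
    if (n <= 0)
        return (int)n;
    if (read_full(fd, buf, len) != (ssize_t)len)
        return -1;
    *len_out = len;
    return 1;
}
```

The reader never has to guess where a message ends: it reads one byte, then exactly that many bytes more.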

Broken pipes

There is always a moment when we would like to close the pipe, e.g. because it is not needed anymore. In such a situation, we just call the close function on the descriptor we want to get rid of. The pipe ends held by a process are also closed when that process terminates, e.g. when it gets killed.

But what happens when all the reading descriptors are closed and some process still tries to write? Or, analogously, when no one can write anymore, but there is still a process that would like to read?

The first situation: we are trying to write to a pipe whose read ends are all closed. In effect, our process receives the SIGPIPE signal, and by default this signal kills the process, which can be dangerous. If we want to prevent that, we need to ignore the signal, block it, or handle it; the process then survives, and write returns -1 and sets errno to EPIPE.
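A small sketch of the "ignore it" option, assuming one process is enough to show the effect: with SIGPIPE ignored, writing into a pipe whose only read end is closed simply fails with EPIPE. The function name is hypothetical. Keep in mind that a signal disposition applies to the whole process, so the sketch restores the previous one before returning.

```c
#include <errno.h>
#include <signal.h>
#include <unistd.h>

/* Returns the errno value left by a write into a pipe without readers,
   or 0 if the write unexpectedly succeeded. Hypothetical helper. */
int errno_after_write_without_reader(void)
{
    int pipefd[2];
    if (pipe(pipefd) == -1)
        return errno;

    /* Ignore SIGPIPE for a moment; its default action would kill us. */
    void (*old_handler)(int) = signal(SIGPIPE, SIG_IGN);

    close(pipefd[0]);  /* the only read end is gone */
    int result = 0;
    if (write(pipefd[1], "x", 1) == -1)
        result = errno;  /* expected: EPIPE */
    close(pipefd[1]);

    if (old_handler != SIG_ERR)
        signal(SIGPIPE, old_handler);  /* restore the previous disposition */
    return result;
}
```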

The second situation is less critical. When we read from a pipe whose write ends are all closed, we can still read all the data remaining inside, and then read just returns 0 to indicate end-of-file. No signal is sent and no error occurs.

Furthermore, when all the descriptors of a pipe are closed, the pipe cannot be used anymore and all the data inside it is lost. So if we do not want to lose any data, we should read all of it before closing the last read end.

Closing pipes carelessly is dangerous, but leaving them open unnecessarily can also pose a risk. If we forget to close an unused write end (e.g. in the reading process), read will never return 0, so our program can block forever, still waiting for data.

Okay, let’s see how to use pipes

Opening a new pipe is as easy as calling the pipe function, which has the following syntax:

```c
int pipe(int fildes[2]);
```

The argument is an array where this function puts the file descriptors for reading and writing (fildes[0] and fildes[1] respectively, matching the numbers of the standard input and output streams). It returns 0 on success or -1 when an error occurs; you can find more about the possible errors in the manpages. An end of the pipe is closed by calling the close function on the respective descriptor.

Communication through a pipe can use e.g. write and read, or fprintf and fscanf (after wrapping a descriptor in a FILE stream with fdopen), depending on what we want to do. However, it is important to know how read and write behave with pipes in different situations.

Writing

As mentioned above, we can write to a pipe atomically if our data is no larger than PIPE_BUF bytes. This constant is defined in the header <limits.h>. POSIX requires it to be at least 512 bytes; on Linux, it is 4096 bytes (4 KiB). Longer messages may be split into multiple parts, which is dangerous when several sources write to the same pipe concurrently.

When the data we write is bigger than the remaining space in the pipe, write by default blocks the calling thread until all of it can be written. The situation changes when we set the O_NONBLOCK flag (which is clear by default). Then write does not block; in general, it writes as much as it can and returns the number of bytes actually written, or returns -1 and sets errno to EAGAIN if nothing could be written. A write of at most PIPE_BUF bytes is never split: it either writes everything or fails with EAGAIN. More about that is of course in the manpages.
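To watch the non-blocking behaviour, here is a sketch that switches the write end to O_NONBLOCK with fcntl and keeps writing single bytes until write fails with EAGAIN. The count is the pipe’s capacity on the machine it runs on (typically 65536 bytes on current Linux systems, but nothing guarantees that value). pipe_capacity is a hypothetical name.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Returns how many bytes fit into an empty pipe before a non-blocking
   write fails with EAGAIN, or -1 on an unexpected error. */
long pipe_capacity(void)
{
    int pipefd[2];
    if (pipe(pipefd) == -1)
        return -1;

    /* O_NONBLOCK is clear after pipe(); set it on the write end only. */
    int flags = fcntl(pipefd[1], F_GETFL);
    if (flags == -1 || fcntl(pipefd[1], F_SETFL, flags | O_NONBLOCK) == -1) {
        close(pipefd[0]);
        close(pipefd[1]);
        return -1;
    }

    long total = 0;
    for (;;) {
        ssize_t n = write(pipefd[1], "x", 1);
        if (n == 1) {
            total++;
            continue;
        }
        /* A blocking write would sleep here; ours fails with EAGAIN. */
        if (n == -1 && errno != EAGAIN)
            total = -1;
        break;
    }
    close(pipefd[0]);
    close(pipefd[1]);
    return total;
}
```

Writing one byte at a time is slow, but it makes the count exact without having to handle partial writes.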

A possibly dangerous situation happens when we try to write into a pipe whose read ends are all closed. The process receives the signal SIGPIPE, which kills it by default; if the process survives, write returns -1 and sets errno to EPIPE.

Reading

Reading is slightly easier, as almost no critical problems are possible. If some process has the pipe open for writing and O_NONBLOCK is clear, read blocks the calling thread until some data is written or the pipe is closed by all processes that had it open for writing. With the O_NONBLOCK flag set, when there is nothing to read, read returns -1 and sets errno to EAGAIN. When reading from a pipe with all write ends closed, read returns 0, indicating end-of-file.
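The difference between "nothing yet" and "nothing ever again" is easy to see in one process. In this sketch (the function name is hypothetical), a non-blocking read on an empty pipe fails with EAGAIN while a write end is still open, and returns 0 once the last write end is closed.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Performs two non-blocking reads of an empty pipe: first while the write
   end is open, then after closing it. Returns 0 on success, -1 if the pipe
   could not be set up. */
int empty_pipe_reads(ssize_t *while_open, int *errno_while_open,
                     ssize_t *after_close)
{
    int pipefd[2];
    if (pipe(pipefd) == -1)
        return -1;

    int flags = fcntl(pipefd[0], F_GETFL);
    if (flags == -1 || fcntl(pipefd[0], F_SETFL, flags | O_NONBLOCK) == -1) {
        close(pipefd[0]);
        close(pipefd[1]);
        return -1;
    }

    char c;
    errno = 0;
    *while_open = read(pipefd[0], &c, 1);  /* expected: -1 */
    *errno_while_open = errno;             /* expected: EAGAIN */

    close(pipefd[1]);                      /* the last writer is gone */
    *after_close = read(pipefd[0], &c, 1); /* expected: 0, end-of-file */

    close(pipefd[0]);
    return 0;
}
```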

Code!

A trivial example with a pipe. The program creates a child process, which sends a message through the pipe every second; the parent receives each message and displays it.

```c
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <sys/wait.h>

int main(void)
{
    int pipefd[2];
    if (pipe(pipefd))
    {
        perror("pipe");
        exit(1);
    }
    pid_t pid = fork();
    if (pid < 0)
    {
        perror("fork");
        exit(1);
    }
    else if (pid == 0)
    {
        //Child: only writes, so it closes the read end
        close(pipefd[0]);
        for (int i = 0; i < 8; i++)
        {
            sleep(1);
            write(pipefd[1], "Lorem ipsum", 12); //11 characters + '\0'
        }
        close(pipefd[1]);
        exit(0);
    }
    else
    {
        //Parent: only reads; closing its write end lets read return 0
        //once the child has closed its end
        char b[12];
        ssize_t n;
        close(pipefd[1]);
        while ((n = read(pipefd[0], b, sizeof b)) > 0)
        {
            //Print only the bytes actually received
            printf("%.*s\n", (int)n, b);
        }
        close(pipefd[0]);
        waitpid(pid, NULL, 0);
    }
    return 0;
}
```

This example seems extremely easy, but to be frank, when I first wrote it, it did not work: I had mixed up the descriptors. That is why I recommend giving them descriptive names instead of indexing with 0 and 1, for example:

```c
int pipefd[2];
if (pipe(pipefd)) exit(1);
int piper = pipefd[0]; //read end
int pipew = pipefd[1]; //write end
```

What are the pipes useful for?

Obviously, they are useful for communication between related processes, or between threads of the same process. For example, we can implement a thread pool using pipes (another possible implementation could use e.g. condition variables and shared memory). If you do not know what it is, here is a short explanation. A thread pool is a parallel programming pattern for distributing many tasks among threads when we do not want to create a separate thread for each task for performance reasons: creating a new thread is expensive. Instead, we create a bunch of threads at the beginning of our program and let them sleep. When there is something to do, we wake some of them up and distribute the work. As they already exist, they can start working immediately. I have already used a simple kind of this pattern in my post about workers in Node.js.

I suppose you now know how to use pipes to implement this pattern. For every thread, we create a pipe; the thread calls read and waits for a task coming through the pipe from the main thread. The task could be almost anything: for example, we can send a pointer to a function we would like the thread to call, a pointer to its arguments, etc. After the work is done, the thread can send the result back through another pipe. Powerful, isn’t it?
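A minimal sketch of a single worker built this way, assuming POSIX threads (compile with -pthread). The struct task layout and all function names are made up for this illustration. Sending function pointers only makes sense between threads of one process; a real pool would start several workers, each with its own task pipe.

```c
#include <pthread.h>
#include <unistd.h>

/* Hypothetical task format: a function and its argument. */
struct task {
    long (*fn)(long);
    long arg;
};

/* The two descriptors one worker thread uses. */
struct worker {
    int task_r;    /* read end of the task pipe */
    int result_w;  /* write end of the result pipe */
};

static void *worker_main(void *p)
{
    struct worker *w = p;
    struct task t;
    /* The thread sleeps in read() until a task arrives. A struct task is
       much smaller than PIPE_BUF, so it travels through the pipe whole. */
    while (read(w->task_r, &t, sizeof t) == (ssize_t)sizeof t) {
        long result = t.fn(t.arg);
        write(w->result_w, &result, sizeof result);
    }
    /* read() returned 0: the task pipe was closed, so the worker quits. */
    return NULL;
}

static long square(long x)
{
    return x * x;
}

/* Sends the tasks square(1) .. square(n) to one worker thread, one at a
   time, and adds up the results. Returns -1 if setup fails. */
long sum_of_squares_via_worker(long n)
{
    int task_pipe[2], result_pipe[2];
    if (pipe(task_pipe) == -1)
        return -1;
    if (pipe(result_pipe) == -1) {
        close(task_pipe[0]);
        close(task_pipe[1]);
        return -1;
    }

    struct worker w = { task_pipe[0], result_pipe[1] };
    pthread_t tid;
    if (pthread_create(&tid, NULL, worker_main, &w) != 0) {
        close(task_pipe[0]);
        close(task_pipe[1]);
        close(result_pipe[0]);
        close(result_pipe[1]);
        return -1;
    }

    long sum = 0;
    for (long i = 1; i <= n; i++) {
        struct task t = { square, i };
        long r;
        if (write(task_pipe[1], &t, sizeof t) != (ssize_t)sizeof t)
            break;
        if (read(result_pipe[0], &r, sizeof r) != (ssize_t)sizeof r)
            break;
        sum += r;
    }

    close(task_pipe[1]);  /* no more tasks: the worker's read() returns 0 */
    pthread_join(tid, NULL);
    close(task_pipe[0]);
    close(result_pipe[0]);
    close(result_pipe[1]);
    return sum;
}
```

Waiting for each result before sending the next task keeps the sketch free of deadlocks: if the main thread sent thousands of tasks first, both pipes could fill up, leaving both threads blocked in write.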

FIFO

FIFO (First In, First Out) is another way of communication between processes. From the programmer’s point of view, it is similar to the unnamed pipes described above; in fact, FIFOs are also called named pipes. Each FIFO has its own file in the filesystem, and the FIFO’s name is just the file name. Every process that has permission to open such a file can use the FIFO it represents.

Main differences between a FIFO and a pipe

- A pipe is created with the pipe function. A FIFO is opened like an ordinary file, using open; however, before that, we need to create it with the mkfifo function. Moreover, opening a FIFO blocks the opening thread by default until the other end is opened elsewhere. It means that if our thread opens the FIFO for writing, it has to wait until another one opens it for reading, and vice versa. Of course, we can use the O_NONBLOCK flag to avoid waiting (a write-only open with no reader then fails with ENXIO instead of blocking).
- A pipe disappears when no one uses it; a FIFO remains in the filesystem for later use.
- A pipe can be used only by related processes, while a FIFO can be used by any process that has permission to read or write the particular FIFO file. It means that the processes using a FIFO do not need to be related at all.

Using FIFOs

First, we need to create a FIFO file using the mkfifo function from the <sys/stat.h> header. Here is its signature:

```c
int mkfifo(const char *path, mode_t mode);
```

The path argument is the path and name of the FIFO’s file. In mode, we can use flags or octal constants to grant particular permissions for that file, and thus for our named pipe; you can find more about the possible flags and permissions in the documentation. Notice that an attempt to create a file that already exists fails with the EEXIST error. More about mkfifo is in the manpages.
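A minimal sketch of dealing with EEXIST, assuming it is fine to reuse a FIFO left over from an earlier run: ensure_fifo (a hypothetical name) creates the FIFO with read and write permission for the owner only, and accepts an existing file only if it really is a FIFO.

```c
#include <errno.h>
#include <sys/stat.h>
#include <sys/types.h>

/* Makes sure a FIFO exists at path. Returns 0 on success, -1 otherwise. */
int ensure_fifo(const char *path)
{
    /* S_IRUSR | S_IWUSR equals octal 0600: read and write for the owner.
       The process umask can still remove bits from this mode. */
    if (mkfifo(path, S_IRUSR | S_IWUSR) == 0)
        return 0;
    if (errno != EEXIST)
        return -1;

    /* Something already exists at path: accept it only if it is a FIFO. */
    struct stat st;
    if (stat(path, &st) == -1 || !S_ISFIFO(st.st_mode))
        return -1;
    return 0;
}
```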

The next step is opening the FIFO for reading or writing. We can do it with the open function. As I stated above, unless we set the O_NONBLOCK flag, opening a FIFO blocks the opening thread until the opposite end is also open. As you can guess, the non-blocking variant is the safer choice if we do not want to block our thread forever because of some unpredictable conditions at the opposite end of the FIFO.

After opening it, we have a descriptor, so we can use write and read. For a programmer, FIFOs work the same way as unnamed pipes.

To tell the operating system that our thread will not use the FIFO anymore, we close it like a normal file, but we should remember that this has the same consequences as closing an unnamed pipe. However, closing the FIFO does not remove it from the filesystem. The FIFO file remains there for future use, and an attempt to create the same FIFO again will fail with an error. We can remove the FIFO file using unlink.
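The whole life cycle can be sketched in a single process, assuming we may create and remove a FIFO at the given path (fifo_roundtrip is a hypothetical name). Opening the read end with O_NONBLOCK returns at once even without a writer, and the following blocking open for writing succeeds immediately, because a reader already exists. The order matters: a non-blocking open for writing with no reader would fail with ENXIO instead.

```c
#include <fcntl.h>
#include <string.h>
#include <sys/stat.h>
#include <unistd.h>

/* Creates a FIFO at path, passes msg through it, then removes the FIFO.
   msg must fit into the pipe buffer, since the same process reads it back.
   Returns the number of bytes read back, or -1 on error. */
ssize_t fifo_roundtrip(const char *path, const char *msg, char *out, size_t cap)
{
    if (mkfifo(path, 0600) == -1)
        return -1;

    /* Does not wait for a writer, thanks to O_NONBLOCK. */
    int rd = open(path, O_RDONLY | O_NONBLOCK);
    /* Does not wait either: the FIFO already has a reader. */
    int wr = (rd == -1) ? -1 : open(path, O_WRONLY);

    ssize_t n = -1;
    if (rd != -1 && wr != -1) {
        size_t len = strlen(msg);
        if (write(wr, msg, len) == (ssize_t)len)
            n = read(rd, out, cap);
    }

    if (wr != -1)
        close(wr);
    if (rd != -1)
        close(rd);
    /* close() leaves the FIFO file in place; unlink() removes it. */
    unlink(path);
    return n;
}
```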

Code!

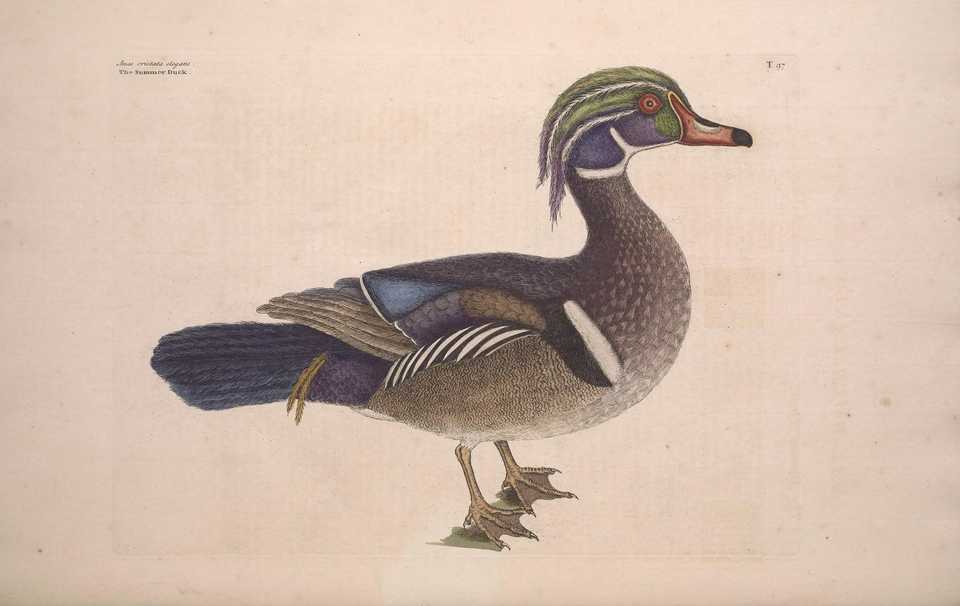

Just kidding, no code, sorry. As I am writing this, it is 1 a.m., which is not a good time for any coding, not even for examples. Here is an 18th-century drawing of a duck instead.

What can go wrong

I suppose that pipes and FIFOs can sometimes be pretty hard to debug. It is easy to confuse descriptors, forget to close something, or close something unintentionally. Here are some examples of code which at first glance should work, but actually does not. Can you guess what is wrong?

Example #1

Let’s assume we want to use two FIFOs to obtain duplex communication between two programs, so we open the FIFO files (which already exist)…

```c
//FILE1.c
#include <fcntl.h>
#include <stdio.h>

int main(void)
{
    int fd1, fd2;
    ///...
    if ((fd1 = open("2to1", O_RDONLY)) < 0)
    {
        perror("open");
        return 1;
    }
    if ((fd2 = open("1to2", O_WRONLY)) < 0)
    {
        perror("open");
        return 1;
    }
    /* Reading from fd1 and writing to fd2 */
    return 0;
}
```

```c
//FILE2.c
#include <fcntl.h>
#include <stdio.h>

int main(void)
{
    int fd1, fd2;
    ///...
    if ((fd1 = open("1to2", O_RDONLY)) < 0)
    {
        perror("open");
        return 1;
    }
    if ((fd2 = open("2to1", O_WRONLY)) < 0)
    {
        perror("open");
        return 1;
    }
    /* Reading from fd1 and writing to fd2 */
    return 0;
}
```

There is a deadlock. The first process tries to open the 2to1 FIFO for reading and blocks, because its writing end has not been opened yet. The second process does the same with 1to2 and has to wait as well. As a consequence, both processes wait for each other and cannot do anything. To avoid this, we could swap the two ifs in one of the programs. Then both programs first open the same FIFO, one for reading and the other for writing, so both opens complete and no deadlock happens. Another solution would be to open e.g. the reading ends of the FIFOs in non-blocking mode.

Example #2

Now, we want to send some strings through a pipe. Okay, strings, so it would be useful to use fprintf and fscanf…

```c
#include <unistd.h>
#include <stdlib.h>
#include <stdio.h>

int main()
{
    int fd[2];
    pipe(fd); //No error handling for simplicity here
    FILE *reader = fdopen(fd[0], "r");
    FILE *writer = fdopen(fd[1], "w");
    pid_t p = fork();
    if (p > 0)
    {
        //Parent
        int result;
        fscanf(reader, "Result %d", &result);
        printf("Result computed by child: %d\n", result);
    }
    else
    {
        //Child
        fprintf(writer, "Result %d", 123);
        fflush(writer);
    }
    //Closing the pipe etc.
    return 0;
}
```

…but nothing happens again!

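To understand this, we need to know how fscanf parses %d: it keeps reading digits until it sees a character that cannot be part of the number, or end-of-file. The child sends "Result 123" and nothing after it, so fscanf waits for the next character. End-of-file never comes either, because the parent itself still holds the write end of the pipe open (it got a copy when forking). So it waits, and waits, and waits… To fix this code, it is enough to put \n at the end of the "Result %d" string, so the number is followed by a character that ends it. Closing the unused write end in the parent (fclose(writer) right after fork) would also let fscanf finish, as it would then see end-of-file once the child exits.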

It is good to remember such peculiarities of the high-level I/O functions, as situations like the one above can be pretty confusing and hard to debug.

Example #3

In this example, we want to do something when our communication through a pipe has just finished by handling the SIGPIPE signal…

```c
#include <stdio.h>
#include <unistd.h>
#include <signal.h>

void got_sigpipe(int sig)
{
    (void)sig;
    //write() instead of printf(): printf is not async-signal-safe
    const char msg[] = "Pipe is closed!\n";
    write(STDOUT_FILENO, msg, sizeof msg - 1);
}

int main()
{
    signal(SIGPIPE, got_sigpipe);
    int fd[2];
    pipe(fd);
    pid_t p = fork();
    if (p > 0)
    {
        //Parent stops listening
        close(fd[0]);
    }
    else
    {
        //Child writes something, this can work
        write(fd[1], "One", 3);
        sleep(2);
        //And this should cause SIGPIPE, but...
        write(fd[1], "Two", 3);
        printf("Okay\n");
    }
    return 0;
}
```

…but it never comes! We closed the listening end before writing, so writing should cause the infamous SIGPIPE.

If we want to get SIGPIPE, we have to close all the reading ends. Our main process has forked, so there are actually two read ends of the pipe. One of them is closed in the parent process, but the one in the child is never closed, so the child, which is writing, is capable of reading as well. There is no reason for SIGPIPE here. Closing fd[0] in the child too, right after fork, would fix it.

Example #4

Okay, the last example, and this one is not so bad. It can actually work…

```c
// Program 1
#include <fcntl.h>
#include <unistd.h>

int main()
{
    int fd = open("fifo", O_RDWR | O_TRUNC);
    write(fd, "Hello!", 7); //with null character
    close(fd);
    return 0;
}
```

```c
//Program 2
#include <fcntl.h>
#include <stdio.h>
#include <unistd.h>

int main()
{
    char buffer[7];
    int fd = open("fifo", O_RDONLY);
    read(fd, buffer, 7);
    printf("%s\n", buffer);
    return 0;
}
```

…but sometimes it does not! Do you know why?

When something involving multiple processes sometimes works and sometimes does not, it may be a race condition. This situation is no different. Let’s analyze how the code can execute.

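One possibility is that the first program opens the FIFO (for both reading and writing, so there is no blocking here; note that POSIX leaves O_RDWR on a FIFO undefined, although Linux allows it) and writes, then the second program opens the FIFO before the first one closes it, reads, and everything is fine.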

But there is another possibility: the first program opens the FIFO, writes, and closes it before the second one opens it. At that moment, no process has the FIFO open, so the written data is discarded. Then the second program tries to open the FIFO and blocks, as no one has it open for writing. Nothing happens then, and we have a problem. Tricky example, isn’t it? To avoid such a situation, we could use a non-blocking open, or make sure when writing the code that the second end will eventually be opened as well.
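One way to make sure of that, assuming the writer may simply wait for its reader: opening the FIFO with O_WRONLY instead of O_RDWR makes open block until someone opens the FIFO for reading, so the message can no longer be written into a FIFO that nobody will read. In this sketch, both programs are squeezed into one function with fork so that the outcome can be checked; the function name is hypothetical, and the function creates and removes the FIFO itself.

```c
#include <fcntl.h>
#include <string.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* The child plays program 1 and the parent plays program 2.
   Returns 1 if "Hello!" arrived, 0 if something else did, -1 on error. */
int hello_through_fifo(const char *path)
{
    if (mkfifo(path, 0600) == -1)
        return -1;

    pid_t pid = fork();
    if (pid == -1) {
        unlink(path);
        return -1;
    }
    if (pid == 0) {
        /* Program 1: O_WRONLY blocks until a reader has opened the FIFO. */
        int fd = open(path, O_WRONLY);
        if (fd == -1)
            _exit(1);
        write(fd, "Hello!", 7);  /* 7 bytes, including the '\0' */
        close(fd);
        _exit(0);
    }

    /* Program 2: O_RDONLY blocks until the writer has opened the FIFO. */
    char buffer[7];
    int result = -1;
    int fd = open(path, O_RDONLY);
    if (fd != -1) {
        ssize_t n = read(fd, buffer, sizeof buffer);
        result = (n == 7 && memcmp(buffer, "Hello!", 7) == 0);
        close(fd);
    }
    waitpid(pid, NULL, 0);
    unlink(path);
    return result;
}
```

Whichever process reaches its open first simply waits for the other one, so the order of execution no longer matters.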

END-OF-FILE

There are some more interesting uses of pipes and FIFOs, like the popen function, which runs a command in a new shell subprocess and gives us a ready-to-use pipe connected to it, or using FIFOs instead of temporary files, but this article has grown too long to contain all that stuff.

If you spotted any mistake in this post, please let me know about it.

Thank you for your patient reading. I hope the knowledge above will be useful and will help some people better understand the wonderful world of operating systems.